Test Case Execution and Reporting

Test cases can be executed in several ways:

directly from the Int4 APITester Cockpit

by using the available APIs (REST, SOAP, RFC, SAP GUI transaction); for more details, see: API to integrate Int4 Suite with other software

by running the dedicated SAP GUI transaction

/INT4/IFTT_RUN - this also allows scheduling test runs in the background; read more about background scheduling here: Background processing

Please note:

Customers are not allowed to execute test cases on productive systems.

Triggering test execution from Int4 APITester Cockpit

Start by selecting a single folder or a set of folders containing the test cases or test scenarios to be executed. The list of relevant test cases opens on the right-hand side of the screen.

From this moment, all test cases can be executed by clicking the “Execute All” button on the screen.

It is possible to select specific test cases using the checkboxes to the left of the test cases. In that case, the “Execute All” button changes to “Execute Selected”.

The Test Run report opens automatically once the tests are executed from the APITester Cockpit. To access the Test Run reports of tests scheduled in the background or executed via the APIs, use the Test Runs Report, as documented here: Test Runs Report

If the Execute button is not visible, make sure to switch off Edit mode by clicking “Save” or “Cancel” at the top of the screen.

Execution with parameters

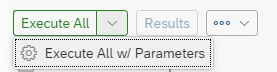

Execution of test cases is controlled by specific settings. If they are not explicitly selected, defaults are used. To control the test execution settings, use the down arrow beside the “Execute” button and choose “Execute w/Parameters”.

Execute w/Parameters button in the dropdown

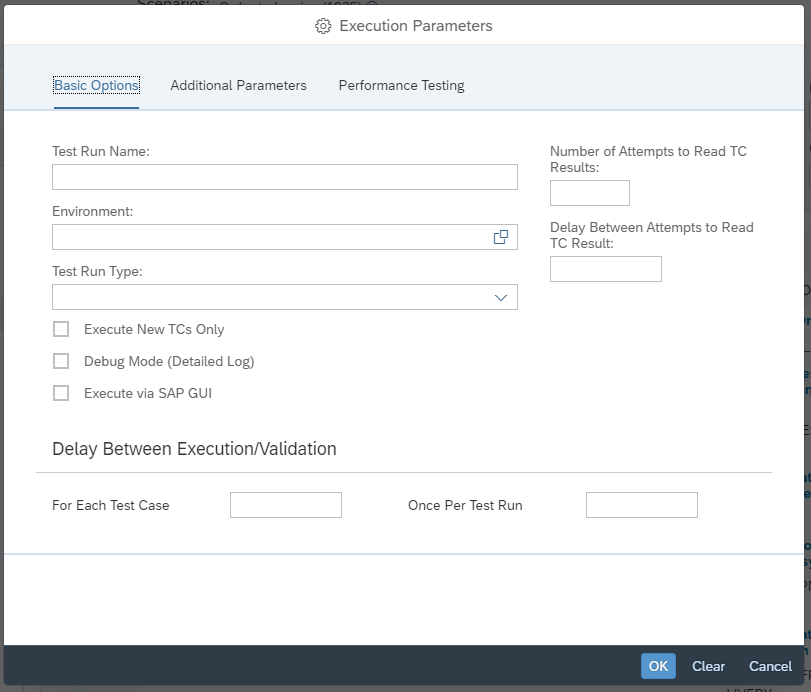

Once selected, the Execution Parameters window pops up.

Execution Parameters window

Basic parameters

Test Run Name - name to identify test run - free form text description, defaults to date and time

Environment - the environment where the test cases should be executed, defaults to the one specified on test case, or on folder level

Test Run type - select the applicable type of test run - used for internal reporting to differentiate between various test runs (e.g. unit testing, regression, test design). Some of the Test Run Types might have special features (e.g. Change Request Test - enables Change Request Payload Validation rules, if they are present)

Execute New TCs Only - executes only those test cases which have not been tested yet

Debug Mode - provides a detailed log, useful for test design and debugging

Execute via SAP GUI - downloads and runs a SAP GUI link, which (on a configured system) starts SAP GUI and opens the testing transaction. This is needed for certain scenarios which use SAP GUI based eCATT scripts.

Number of Attempts to Read TC Results - the number of times that Int4 Suite will poll for data after test execution

Delay Between Attempts to Read TC Result - delay between each consecutive attempt to read test results

Delay Between Execution/Validation

For Each Test Case - delay between test case execution start and first attempt to read TC results, for each test case

Once Per Test Run - as above, but applied to all test cases in parallel.

The total time that Int4 Suite waits for test execution to be processed is the sum of Delay Between Execution/Validation and the product of Number of Attempts to Read TC Results and Delay Between Attempts to Read TC Result.

Please note that polling the integration platform can be computationally expensive in certain networking and platform scenarios. If the approximate time for interface processing is known, it makes sense to adjust the relevant delays and reduce the number of attempts, so that testing puts less load on the platform.
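
The waiting-time arithmetic described above can be sketched in a few lines of Python. This is an illustrative calculation only: the function and argument names are hypothetical, chosen for this example, and are not part of Int4 Suite.

```python
def total_wait_seconds(initial_delay, attempts, delay_between_attempts):
    """Total waiting time as described in the text (all names are hypothetical).

    initial_delay          -- Delay Between Execution/Validation
    attempts               -- Number of Attempts to Read TC Results
    delay_between_attempts -- Delay Between Attempts to Read TC Result
    """
    if attempts < 0:
        raise ValueError("attempts must not be negative")
    return initial_delay + attempts * delay_between_attempts


# Two settings with the same total wait but a very different number of polls.
print(total_wait_seconds(initial_delay=0, attempts=30, delay_between_attempts=2))  # 60
print(total_wait_seconds(initial_delay=40, attempts=4, delay_between_attempts=5))  # 60
```

Both calls wait 60 seconds in total, but the second one polls the platform 4 times instead of 30, which is the kind of tuning the note above suggests when the processing time is roughly known.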

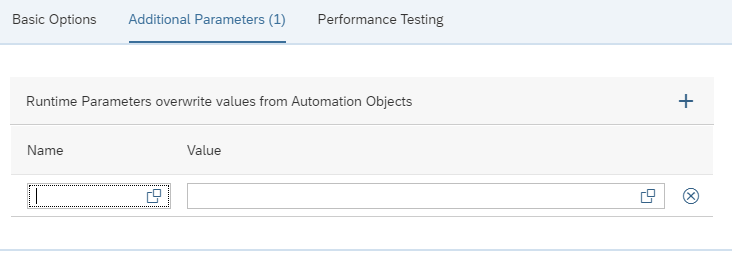

Additional Parameters

Additional Parameters tab in Execution Parameters

For certain scenarios, you might want to override the parameters defined in the Automation Object for a particular test run. Choose the parameter name and provide the new value. Multiple parameters can be altered.

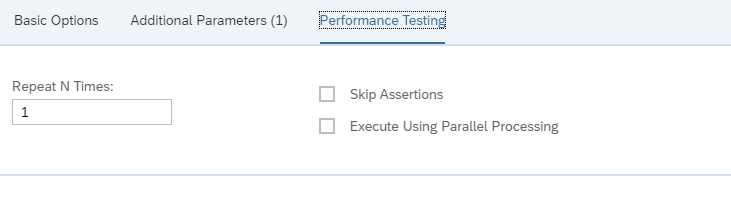

Load / Performance Testing

Performance Testing tab in Execution Parameters

Int4 Suite can be used to test the behaviour of the integration platform under load. This is enabled by the following features:

Repeat N Times - the test case run is repeated the specified number of times

Skip Assertions - this will skip the output validation step in test case execution

Execute Using Parallel Processing - normally Int4 starts all test cases in sequence and then captures the output using parallel processing. Checking this box triggers all the tests (excluding the ones which refer to a parent) in parallel.

Starting test

Clicking “Execute” or confirming the parameters with “OK” triggers a test run. A new tab opens with the Test Run Details.

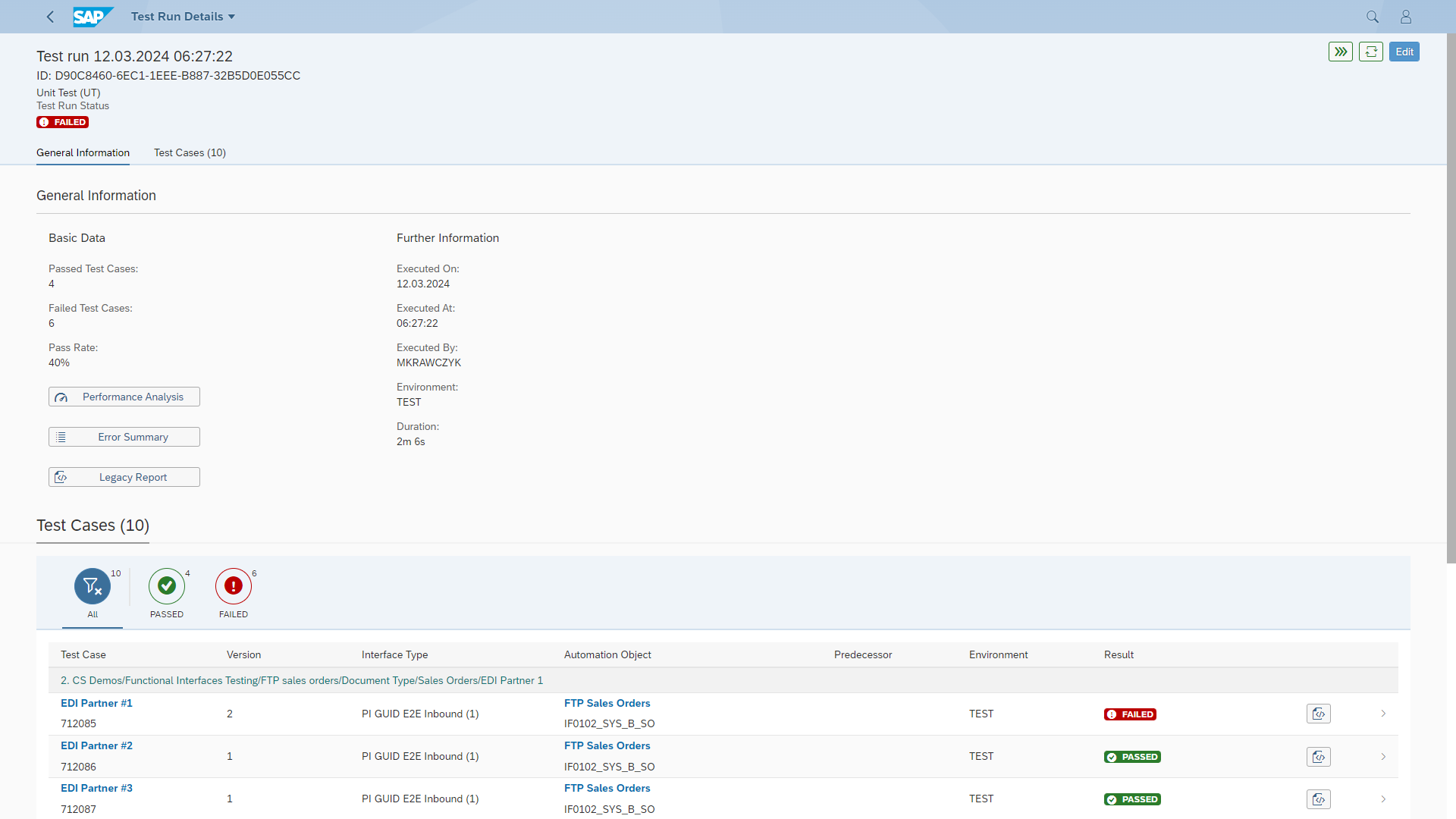

Execution Report - Test Run Summary

The test run summary screen details the specifics of the test run. It shows the status of each test case in the list at the bottom and the overall test status at the top.

In the summary section, the user can navigate to:

Performance Analysis (if performance data is available)

Error Summary

Legacy Report

A Test Run is successful if all of the test cases in the run are successful. A single failed test case fails the run, but does not stop it.

Test Case information follows the details from the APITester Cockpit. Clicking on a row opens the test details for that particular test case.
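
The rule above (one failure fails the run, but the remaining test cases still execute) can be modelled as follows. This is a minimal sketch of the rule only, not Int4 Suite code; `execute_run` and the callable-based test cases are hypothetical.

```python
def execute_run(test_cases):
    """Run every test case and derive the overall run status.

    test_cases maps a test case name to a callable returning True on success
    (a hypothetical representation used only for this sketch).
    """
    results = {}
    for name, case in test_cases.items():
        # A failure is recorded, but execution continues with the next case.
        results[name] = case()
    # The run is successful only if every single test case succeeded.
    return all(results.values()), results


cases = {"TC1": lambda: True, "TC2": lambda: False, "TC3": lambda: True}
run_ok, results = execute_run(cases)
print(run_ok)   # False
print(results)  # {'TC1': True, 'TC2': False, 'TC3': True}
```

TC3 is still executed and reported even though TC2 failed, while the run as a whole is marked as failed.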

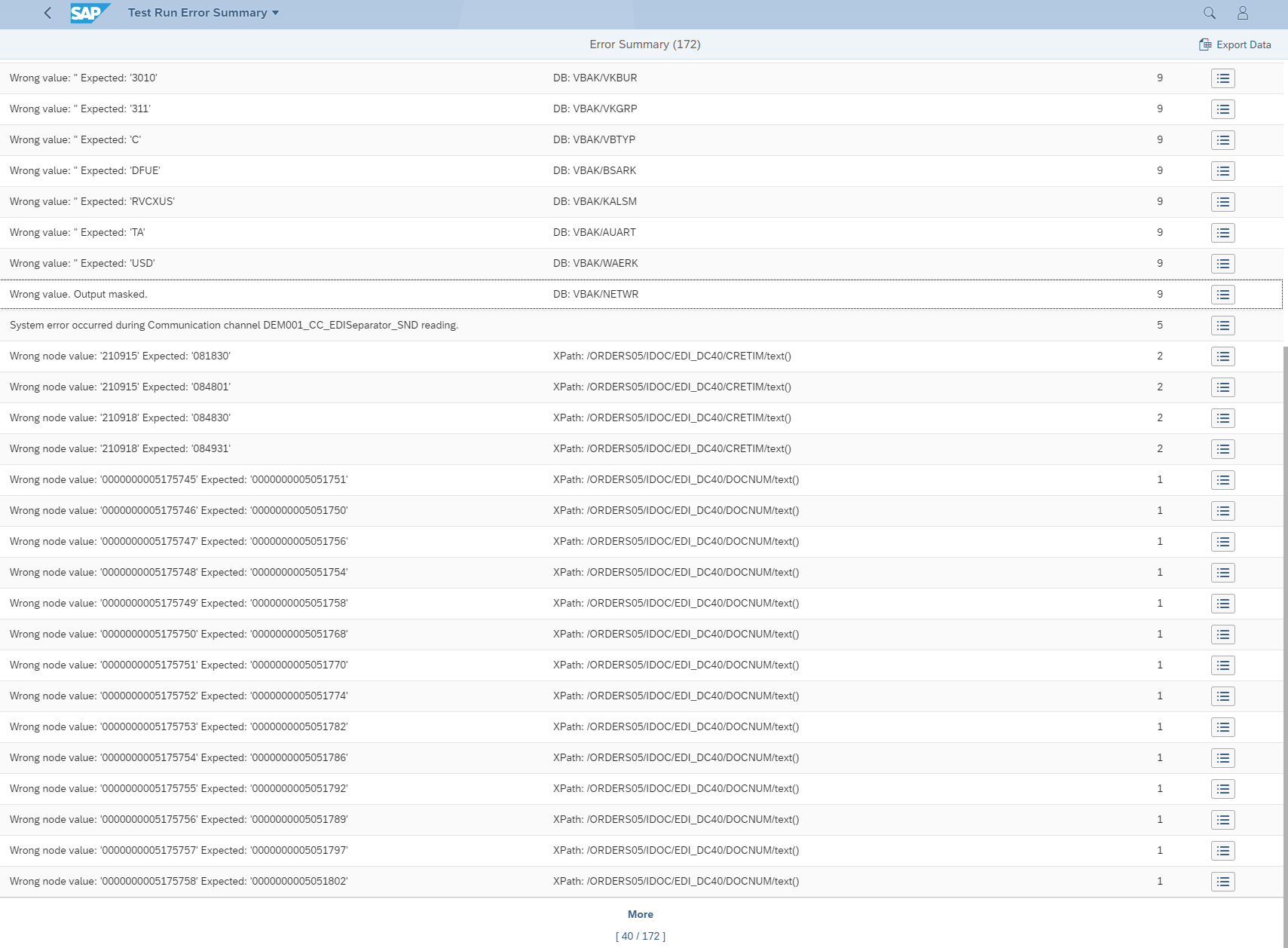

Error Summary

In this section, the errors from all of the test cases are summed up and aggregated by text and location, showing the message count. The user can see the list of test cases that include a specific message by clicking the button at the end of the row. Clicking on one of the test cases navigates to its run details.
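
The aggregation described above can be pictured with a short sketch. It assumes each error is available as a (test case, message text, location) tuple; that data shape, the function name and the sample messages are hypothetical and only illustrate grouping by text and location.

```python
from collections import defaultdict


def summarize_errors(errors):
    """Group errors by (text, location) and count the messages per group."""
    groups = defaultdict(list)
    for test_case, text, location in errors:
        groups[(text, location)].append(test_case)
    return [
        {
            "text": text,
            "location": location,
            "count": len(cases),               # message count for the row
            "test_cases": sorted(set(cases)),  # test cases behind the row
        }
        for (text, location), cases in groups.items()
    ]


# Hypothetical sample data
errors = [
    ("TC1", "Value differs", "/Order/Amount"),
    ("TC2", "Value differs", "/Order/Amount"),
    ("TC2", "Missing node", "/Order/Currency"),
]
for row in summarize_errors(errors):
    print(row["count"], row["text"], row["location"], row["test_cases"])
```

Each resulting row corresponds to one line of the Error Summary, and the `test_cases` list corresponds to what the button at the end of the row reveals.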

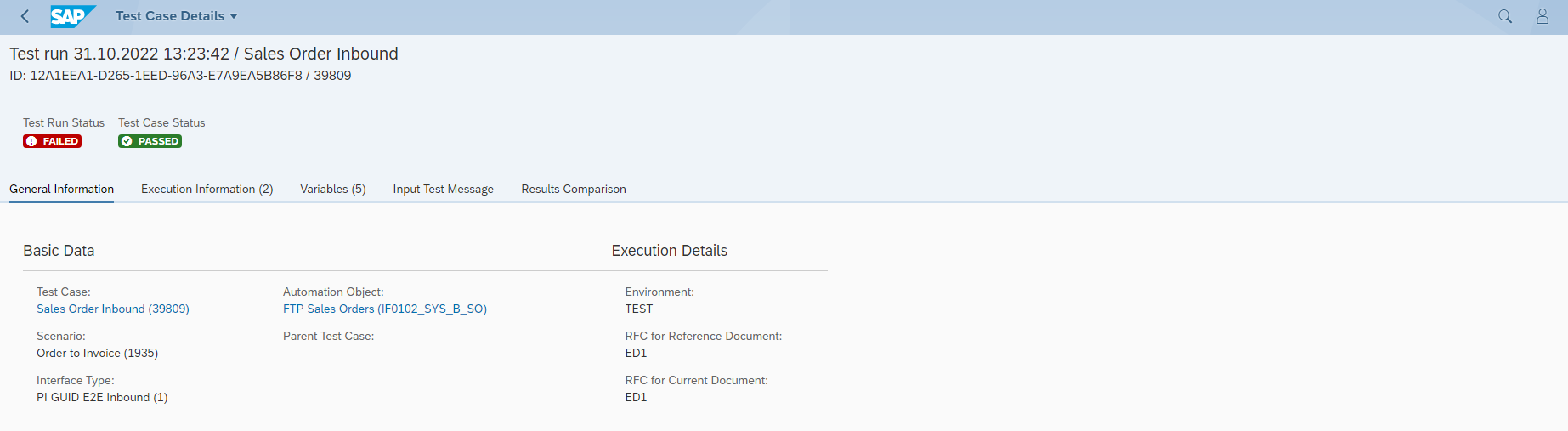

Execution Report - Test Case Run Details

The Test Run Report contains extensive details about the test execution. It is intended to be used directly as test evidence in change control processes.

Test Case Run details - header and General Information

The Basic Data section contains general details about the test case executed and the execution context.

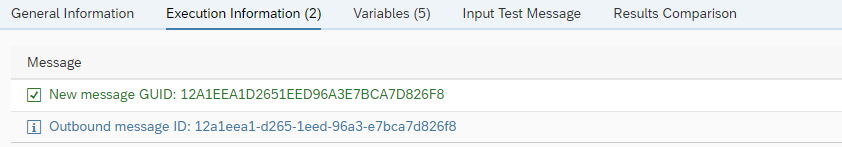

Test Case Run details - Execution Information

Execution Information contains the log of the test execution. In normal mode the log is brief and displays key information to identify the test artifacts (e.g. the message IDs on the integration platform). Enabling Debug Mode in Execution Parameters provides more technical details on test execution and issues.

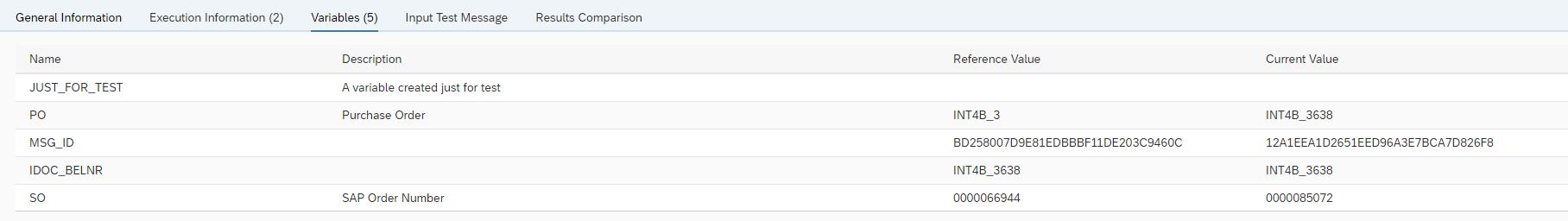

Test Case Run details - Variables

The Variables section contains the list of Variables from the relevant Automation Object with description and values.

Reference value - the value at the beginning of test case execution, usually mapping to the value read out from the test case Payload (from reference document)

Current value - the value at the end of test execution

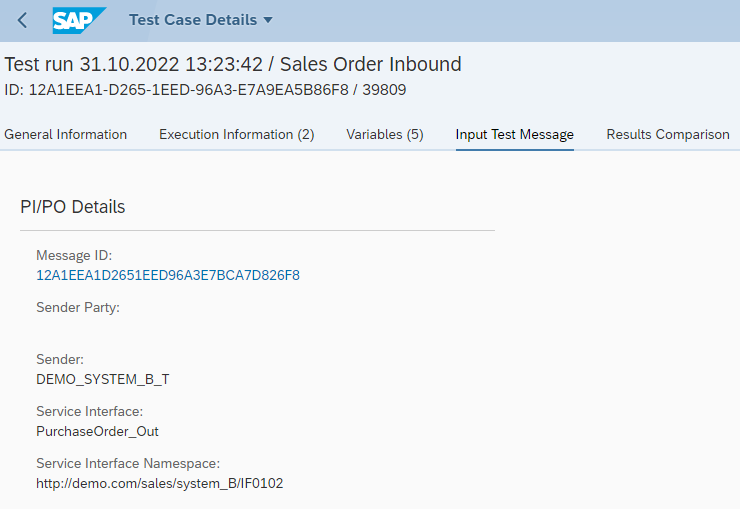

Test Case Run details - Input Test Message

The Input Test Message section appears for Inbound test types and shows in detail the message that was injected into the integration platform.

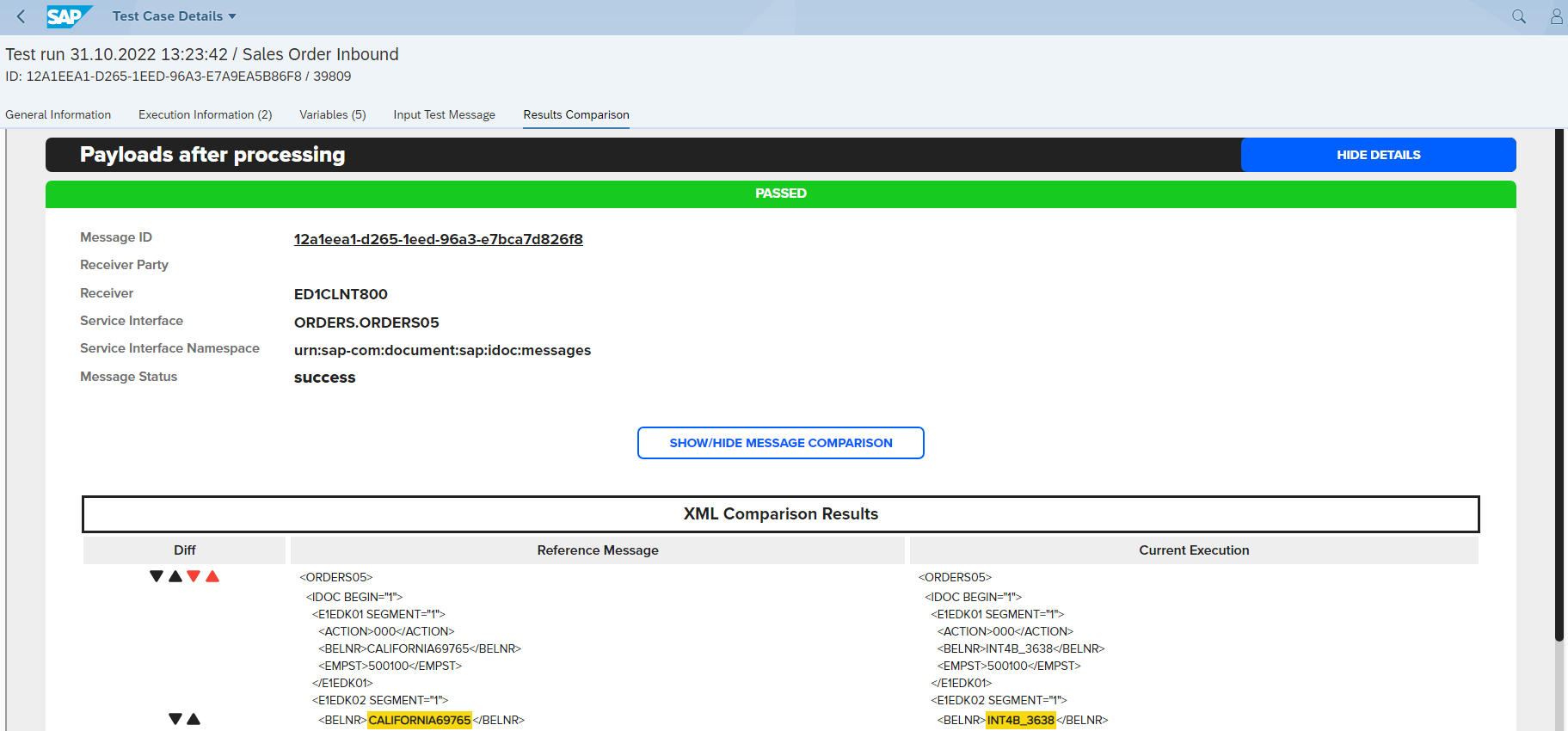

Results Comparison

This is by far the most complex section of the report. It is split into two sections:

Payload comparison after processing - this section compares the output of the integration platform to the reference document from the Test Case Payload

SAP Backend Document Validation - if the Automation Object specifies a DB comparison object, Int4 APITester presents the details of comparing the reference document with the one captured in the current test execution

Validation of business documents is available in Int4 APITester only

Payloads after processing

Payload Comparison section

Payload Comparison shows the reference message (on the left) and current execution (on the right) in a structurally aligned way, highlighting the differences.

Yellow - difference is acceptable and triggers a warning, the test case will pass

Red - difference is unexpected and triggers an error, the test case will fail

Green - difference is expected, the test case will pass (only for Change Request Test)
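
The colour rules above reduce to a simple verdict: a test case fails as soon as one difference is classified as red. The sketch below illustrates that reading; the `Diff` enum and `case_passes` function are hypothetical names used only for this example.

```python
from enum import Enum


class Diff(Enum):
    YELLOW = "acceptable difference, warning"
    RED = "unexpected difference, error"
    GREEN = "expected difference (Change Request Test only)"


def case_passes(differences):
    """A test case passes unless at least one difference is red."""
    return Diff.RED not in differences


print(case_passes([Diff.YELLOW, Diff.GREEN]))  # True
print(case_passes([Diff.YELLOW, Diff.RED]))    # False
```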

The arrows on the left allow jumping quickly to the next difference:

Black arrows jump to next difference

Red arrows jump to next error

Results of the validation are driven by Payload Validation Ignore Rules in the Automation Object definition. Please read here for more details: Automation Objects | Common-Payload/Integration-Platform-Validation-Sections

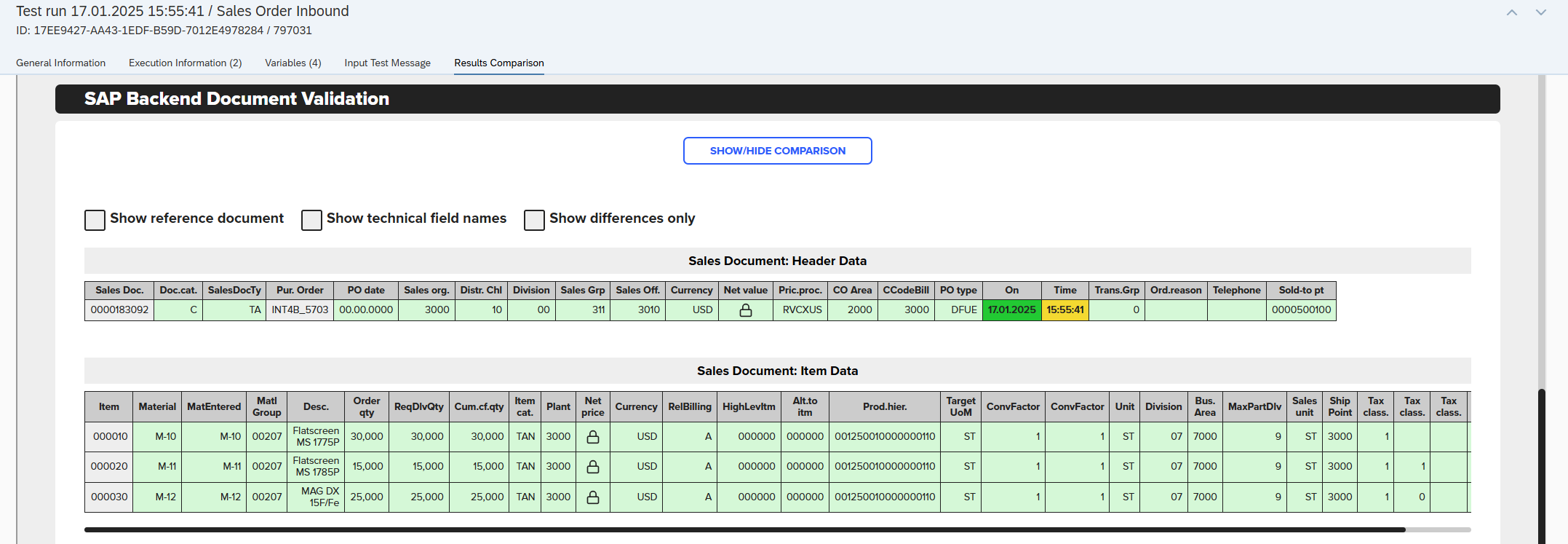

SAP Backend Document Validation

Validation of business documents is available in Int4 APITester only

The backend validation feature connects to SAP S/4HANA or other ABAP backend databases, extracts the relevant documents, and compares them according to the DB Validation Ruleset assigned to the Automation Object.

Depending on the Automation Object settings, the backend document can be fetched during test case creation or test case execution.

The comparison is executed on field level, for each of the defined tables and fields. As with Payload Validation, the expectation is that the documents will be identical. Known and expected differences can be defined in the DB Validation Ruleset for fine control of the results.

By default, only current execution data is shown. Additional checkboxes are available to control the report display:
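
To make the idea of a field-level comparison with known differences concrete, here is a minimal sketch. It is not the Int4 implementation: documents are simplified to one row per table, and `compare_documents`, `expected_differences` and the sample values are assumptions made for illustration.

```python
def compare_documents(reference, current, expected_differences=frozenset()):
    """Compare two documents field by field.

    Documents are modelled as {table: {field: value}} (one row per table,
    a simplification). expected_differences is a set of (table, field)
    pairs whose values are allowed to differ.
    """
    findings = []
    for table in sorted(set(reference) | set(current)):
        ref_fields = reference.get(table, {})
        cur_fields = current.get(table, {})
        for field in sorted(set(ref_fields) | set(cur_fields)):
            ref_value = ref_fields.get(field)
            cur_value = cur_fields.get(field)
            if ref_value == cur_value:
                continue  # identical values need no finding
            findings.append({
                "table": table,
                "field": field,
                "reference": ref_value,
                "current": cur_value,
                "expected": (table, field) in expected_differences,
            })
    return findings


# Hypothetical sample: the creation date is known to differ between runs.
reference = {"VBAK": {"AUART": "OR", "ERDAT": "20240101", "NETWR": "100.00"}}
current = {"VBAK": {"AUART": "OR", "ERDAT": "20240215", "NETWR": "120.00"}}
for finding in compare_documents(reference, current, {("VBAK", "ERDAT")}):
    print(finding["table"], finding["field"], "expected" if finding["expected"] else "unexpected")
```

In this example the date difference is flagged as expected, while the changed net value is reported as unexpected, which mirrors the role a ruleset plays in separating known differences from real errors.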

Show reference document - shows the reference document data in gray

Show technical field names - shows the technical names of tables and fields

Color coding is used to highlight report results:

Light Green - data is the same or matching, based on DB Validation rules

Green - difference is expected, based on Change Request Payload validation rules

Yellow - difference is acceptable and triggers a warning, the test case will pass

Red - difference is unexpected and triggers an error, the test case will fail

The arrows in the first column on the left allow quick navigation between errors: use them to jump to the next or previous row with differences.

Read more here: Database Validation Rulesets

Rerunning and revalidating test cases

To rerun or revalidate test cases, use the Test Runs Report.